Autobotography Intent – AustinH

This is subject to change – slightly or heavily – over spring break, but for now I’m leaning towards something like this:

For my autobotography idea, I’m leaning towards creating an audiovisual piece in Max MSP, or at the very least a generative audio piece. I’m not certain of the specifics beyond that, but the gist of the concept is the same regardless of the execution: user input via a computer will generate audio tones of varying qualities (pitch, amplitude, spatialization, etc.) and, because the input arrives as a sequence of distinct events, these tones will vary relative to each other. Together, they will be recorded as a single audio file: one composition created from the natural, unprompted input of the user(s).

I aim to create a kind of distributable autobiographical material by way of digital audio/electronic music composition arranged by means of the natural motions of the user. At present, I don’t have a creative title in mind for the project.

In one example, a user could run the resulting application (or at the very least, interact with a computer simultaneously running the Max patcher [program in its shell]) and be asked to go about their usual web routine: checking the sites they would normally check, responding to emails, submitting school or work materials, etc. In the process, while muted, the program/patcher would play back a range of different tones depending on which key on the keyboard is pressed, when the period key is pressed, which key is pressed after it, when the mouse is clicked, and when the mouse wheel scrolls. At a predesignated point or after an allotted amount of time, the program would conclude and, having recorded the resulting sounds from the initial tone forward, export the new composition to the desktop.
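As a rough illustration of the mapping described here, the following Python sketch turns a list of input events into tones. It is not the Max patcher itself, and every specific in it is an assumption made for the example: the `InputEvent` and `Tone` types, the fixed pitches for clicks and scrolls, the rule that the key after a period sounds an octave higher, and the use of the gap between events as amplitude.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass
class InputEvent:
    time_s: float    # seconds since the session started
    kind: str        # "key", "click", or "scroll"
    value: str = ""  # the character typed; empty for mouse events


@dataclass
class Tone:
    time_s: float
    freq_hz: float
    amplitude: float  # 0.0 (silent) to 1.0 (full volume)


def events_to_tones(events: List[InputEvent]) -> List[Tone]:
    """Map each input event to a tone that also depends on the previous event."""
    tones: List[Tone] = []
    prev: Optional[InputEvent] = None
    for ev in events:
        if ev.kind == "key" and ev.value:
            # Hypothetical pitch rule: fold the character code into
            # two octaves of semitones above A3 (220 Hz).
            semitone = ord(ev.value[0]) % 24
            freq = 220.0 * 2 ** (semitone / 12)
        elif ev.kind == "click":
            freq = 110.0   # assumed low tone for mouse clicks
        else:
            freq = 1760.0  # assumed high tone for scrolls and anything else

        # Hypothetical accent: the key typed right after a period
        # sounds one octave higher.
        if ev.kind == "key" and prev is not None \
                and prev.kind == "key" and prev.value == ".":
            freq *= 2

        # Assumed amplitude rule: a longer pause before the event gives
        # a louder tone, clamped to the range 0.1 to 1.0.
        gap = ev.time_s - prev.time_s if prev is not None else 1.0
        amplitude = min(1.0, max(0.1, gap))

        tones.append(Tone(ev.time_s, freq, amplitude))
        prev = ev
    return tones
```

Calling `events_to_tones` on a recorded session would give a list of tones that could then be rendered and written to a single audio file, which is the export step described above.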

In another, a user could be presented with a program that renders a live video feed from the computer’s webcam with a static grid or other interface overlaid on top of the video. Depending on how the user interacts with this overlaid interface, using motion from their own body/hands, different sounds will play back, all audible to the user. Recording will begin once the user generates the first sound and end when the user exits the program, which closes only after it has finished exporting the file.
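One way the grid overlay could drive sound is sketched below in Python. This is only an assumption about how the interface might work: it presumes some motion tracker already reports a hand position as normalized coordinates between 0 and 1, and the grid size, the 220 Hz base pitch, and the one-semitone-per-cell rule are all hypothetical choices.

```python
from typing import Tuple


def grid_cell(x: float, y: float, cols: int = 4, rows: int = 3) -> Tuple[int, int]:
    """Return the (column, row) of the grid cell containing a normalized point.

    x and y are expected in the range 0.0 to 1.0; values outside that
    range are clamped to the nearest edge cell.
    """
    col = min(cols - 1, max(0, int(x * cols)))
    row = min(rows - 1, max(0, int(y * rows)))
    return col, row


def cell_frequency(col: int, row: int, cols: int = 4, base_hz: float = 220.0) -> float:
    """Assign each cell a pitch, rising one semitone per cell in reading order."""
    semitones = row * cols + col
    return base_hz * 2 ** (semitones / 12)
```

For example, `cell_frequency(*grid_cell(0.5, 0.5))` gives the pitch for a hand held at the center of the frame.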

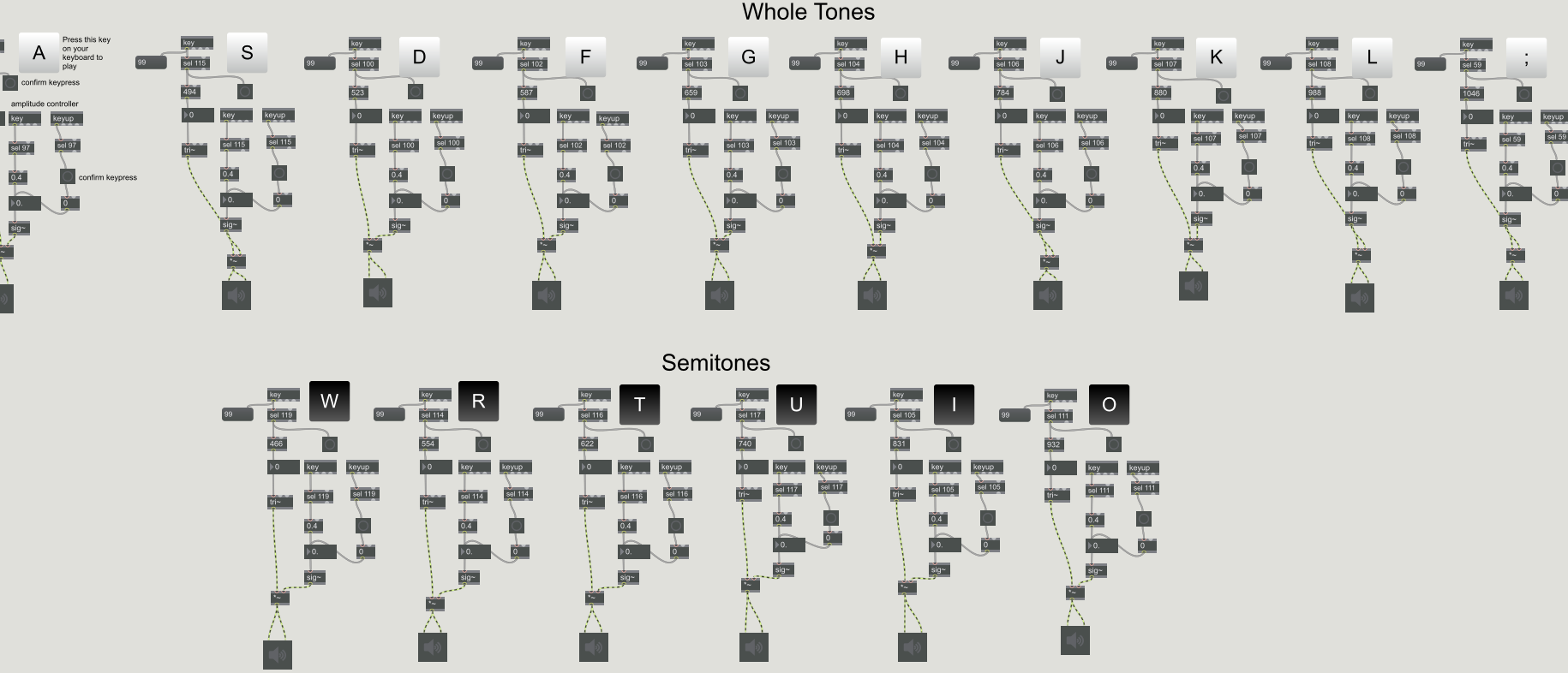

Pictured here is a polyphonic synthesizer I’ve made in Max that uses the home row of keys on a computer keyboard, and a few keys above it, to play a little over one octave starting from A4 (with frequencies matching those of a piano). This can be expanded to encompass every key on a standard keyboard, include all whole tones and semitones, and switch between the two on the fly. Multiple tones can also be assigned to any one key and change according to the keys that precede it.
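For reference, the key-to-pitch mapping of a synthesizer like this can be sketched in Python using the standard equal-temperament formula, f = 440 × 2^(n/12), where n is the number of semitones above A4. The specific key layout below is a guess for illustration (home-row keys as the natural notes, keys in the row above as sharps), not necessarily the layout used in the patcher.

```python
A4_HZ = 440.0

# Hypothetical layout, in rising semitone order from A4 to B5:
# home-row keys play the natural notes, the row above plays the sharps.
KEY_ORDER = ["a", "w", "s", "d", "r", "f", "t", "g",
             "h", "u", "j", "i", "k", "o", "l"]


def key_to_frequency(key: str) -> float:
    """Return the equal-tempered frequency in Hz for a mapped key.

    Raises ValueError if the key is not part of the layout.
    """
    semitones = KEY_ORDER.index(key.lower())
    return A4_HZ * 2 ** (semitones / 12)
```

Under this layout, `key_to_frequency("a")` is A4 and `key_to_frequency("k")` is A5, one octave higher. Extending the synthesizer to the full keyboard would amount to lengthening `KEY_ORDER`.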

Budget: $10 per month for a Max MSP subscription. The work could span three months, until May, so the total expense would likely be $30.

Timeline: With a deadline of early May, work would be divided as follows, broadly:

-March: Research on Jitter, Max’s video processor.

-Early to mid April: Execution of individual project components (sound generation, input registration, etc.).

-Mid April to early May: Combining working components and testing stability in the final piece.

One thought on “Autobotography Intent – AustinH”


I mentioned how they take radio emissions and apply them to sound. I feared that the concept may have been misinterpreted in class and wanted to extend the idea further. Perhaps you can do something with background tasks that the user doesn’t have direct control over. Maybe you can capture the amount of data transfer you incite with hard drive usage, or some sort of variable rolling roar from CPU usage. I wonder how you might capture each CPU and have its activity translated into choir voices. I think it might be interesting to capture not only the user’s direct actions but also what happens as a result of their actions.